The Best of lstopo

Here are lstopo graphical outputs on miscellaneous platforms, from common servers to huge many-core platforms and strange machines.

If your output is even nicer, please send it together with the XML!

Common Servers

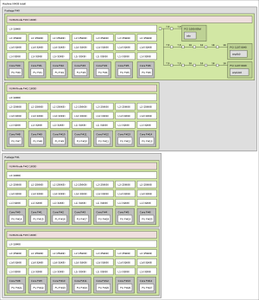

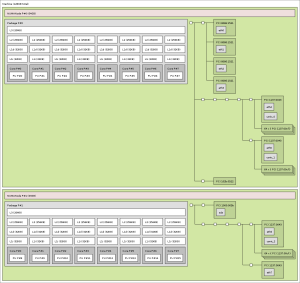

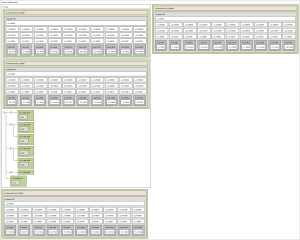

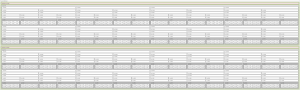

2x Xeon SapphireRapids Max 9460 (from 2023, with hwloc v2.10).

Processors are configured in SubNUMA-Cluster mode, hence 4 DRAM NUMA nodes and 4 HBM NUMA nodes in each package.

Large sets of cores (10 here) are factorized:

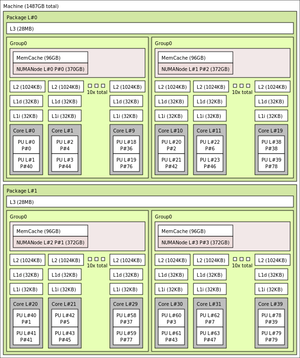

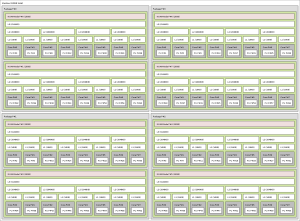

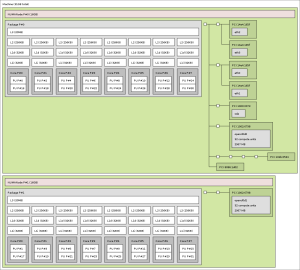

2x Xeon CascadeLake 6230 with DDR as a cache in front of NVDIMMs (from 2019, with hwloc v2.1).

Processors are configured in SubNUMA-Cluster mode, which shows 2 NUMA nodes per package.

Large sets of cores (10 here) are factorized:

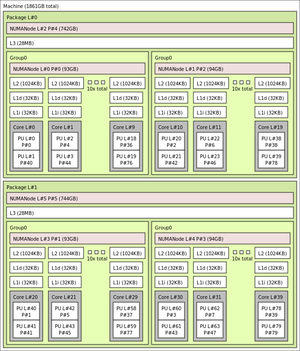

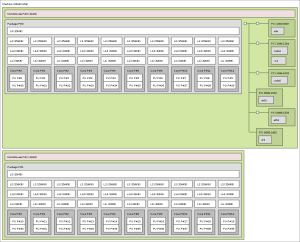

2x Xeon CascadeLake 6230 with NVDIMMs as separate NUMA nodes (from 2019, with hwloc v2.1):

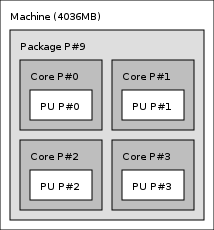

2x Xeon Clovertown E5345 (from 2007, with hwloc v1.11):

2x Xeon Haswell-EP E5-2683v3 (from 2014, with hwloc v1.11).

Processors are configured in Cluster-on-Die mode, which shows 2 NUMA nodes per package:

4x Opteron Interlagos 6272 (from 2012, with hwloc v1.11):

8x Opteron Istanbul 8439SE (from 2010, with hwloc v1.11):

Servers with I/O devices

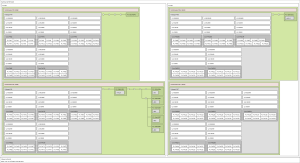

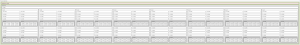

2x Xeon CascadeLake 6230 + 1x NVDIMM exposed as DAX + 1x NVDIMM exposed as a PMEM block device (from 2019, with hwloc v2.1):

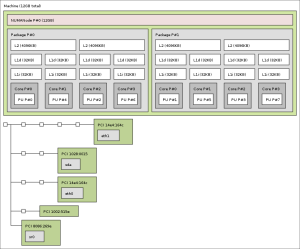

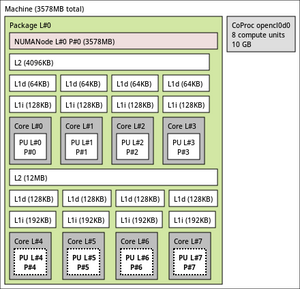

2x Xeon Sandy-Bridge-EP E5-2680 + 1x Xeon Phi + 1x InfiniBand HCA (from 2013, with hwloc v1.11):

2x Xeon Sandy-Bridge-EP E5-2690 + 2x Cisco usNIC (from 2013, with hwloc v1.11).

Many identical unused usNIC PCI virtual functions are collapsed in the graphical output:

2x Xeon Sandy-Bridge-EP E5-2650 + 2x AMD GPUs (from 2013, with hwloc v1.11).

GPUs are reported as OpenCL devices:

2x Xeon Ivy-Bridge-EP E5-2680v2 + 2x NVIDIA GPUs (from 2013, with hwloc v1.11).

GPUs are reported as CUDA devices and X11 display :1.0:

2x Xeon Westmere-EP X5650 + 8x NVIDIA GPUs appearing as CUDA devices (Tyan S7015 from 2010, with hwloc v1.11):

4x Xeon Westmere-EX E7-4870 (Dell PowerEdge R910 from 2012, with hwloc v1.11).

A Group of two packages is used to better report the I/O affinity of the PCI bus:

2x Power8 with 2 NUMA nodes each + 1x InfiniBand HCA (IBM Power S822L from 2015, with hwloc v1.11).

20 cores and 160 hardware threads total:

Big platforms (make sure you have a giant screen)

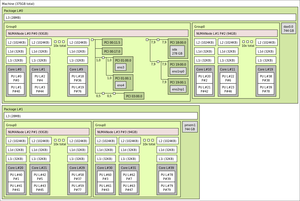

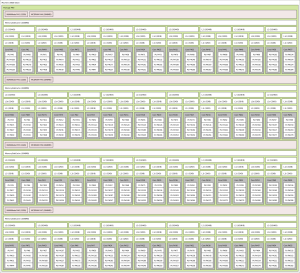

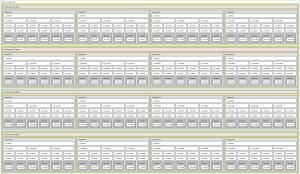

AMD Trento CPU with 4 dual MI250 GPUs.

Each half of a GPU (one GCD) is connected to half a NUMA node through Infinity Fabric.

There is also a Cray network interface in each dual-GPU module.

This node is used in supercomputers such as Frontier and Adastra:

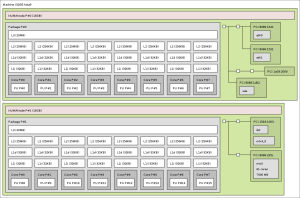

Intel Knights Landing Xeon Phi.

MCDRAM is configured in Hybrid mode (half as a memory-side cache, half as an additional high-bandwidth NUMA node).

SubNUMA clustering (SNC-4) is also enabled, for a total of 8 NUMA nodes.

72 cores and 288 threads (from 2017, with hwloc v2.0, which represents DDR and MCDRAM locality properly):

Intel Knights Landing Xeon Phi.

Same SNC-4 Hybrid configuration as above (from 2017, with hwloc v1.11, which could not expose the memory hierarchy perfectly):

Dual-socket Oracle SPARC T7 (from 2016, with hwloc v1.11).

32 cores per socket, each with 8 hardware threads:

Oracle SPARC T8 (from 2017, with hwloc v1.11).

32 cores with 8 hardware threads each:

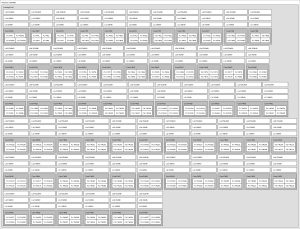

16x Xeon Dunnington X7460 with IBM custom NUMA interconnect (IBM x3950 M2 from 2009, with hwloc v1.11).

96 cores total:

20x Xeon Westmere-EX E7-8837 (SGI Altix UV 100 from 2012, with hwloc v1.11).

160 cores total.

The matrix of distances between NUMA nodes is used to improve the topology by creating Groups of two processors, which actually correspond to physical Blades:
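
To illustrate the idea, here is a simplified Python sketch of distance-based grouping. It is not hwloc's actual code, whose heuristics handle more cases; it only assumes a symmetric distance matrix and puts in the same Group all nodes that are linked, directly or transitively, by the smallest off-diagonal distance. The function name and the 4-node matrix are hypothetical and are not taken from the Altix UV 100.

```python
def group_by_min_distance(dist):
    """Group nodes that are (transitively) at the minimal distance from each other.

    Simplified illustration, not hwloc's algorithm. Assumption: `dist` is a
    symmetric n x n matrix where dist[i][j] is the relative distance from
    node i to node j.
    """
    n = len(dist)
    if n < 2:
        # Nothing to group: each node stays alone.
        return [[i] for i in range(n)]
    # Smallest distance between two distinct nodes.
    min_d = min(dist[i][j] for i in range(n) for j in range(n) if i != j)
    group_of = [-1] * n  # group index of each node, -1 while unassigned
    groups = []
    for start in range(n):
        if group_of[start] != -1:
            continue
        gid = len(groups)
        group_of[start] = gid
        members, stack = [], [start]
        # Depth-first walk over pairs whose distance equals the minimum.
        while stack:
            i = stack.pop()
            members.append(i)
            for j in range(n):
                if j != i and group_of[j] == -1 and dist[i][j] == min_d:
                    group_of[j] = gid
                    stack.append(j)
        groups.append(sorted(members))
    return groups


if __name__ == "__main__":
    # Hypothetical matrix: nodes 0-1 and nodes 2-3 sit on the same blade.
    example = [
        [10, 13, 40, 40],
        [13, 10, 40, 40],
        [40, 40, 10, 13],
        [40, 40, 13, 10],
    ]
    print(group_by_min_distance(example))  # [[0, 1], [2, 3]]
```

Running the same function on a matrix of distances between the resulting Groups would yield a second level, similar to the two levels of Groups shown for the Altix 4700.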

64x Itanium-2 Montvale 9140N (HP Superdome SX2000 from 2009, with hwloc v1.11).

128 cores total, and one additional CPU-less NUMA node.

This old Linux kernel does not report cache information:

128x Itanium-2 Montvale 9150M (SGI Altix 4700 from 2008, with hwloc v1.11).

256 cores total.

Two levels of Groups are created based on the NUMA distance matrix. They correspond to SGI racks of 4 IRUs containing 4 Blades each.

This old Linux kernel does not report cache information:

48x Xeon Nehalem-EX X7560 with distance-based groups (SGI Altix UV1000 from 2010, with hwloc v1.11).

384 cores and 768 hyperthreads total:

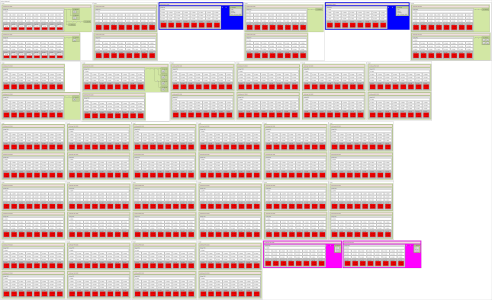

56x Xeon Sandy-Bridge-EP E5-4640 + 2x NVIDIA GPUs + 2x Xeon Phi (SGI Altix UV2000 from 2014, with hwloc v1.11).

448 cores and 896 hyperthreads total.

The machine is made of 26 blades of 2 processors (appearing as Group0 white boxes), 2 blades with 1 processor and 1 NVIDIA GPU (blue boxes), and 2 blades with 1 processor and 1 Xeon Phi (pink boxes).

Red hyperthreads are unavailable to the current user due to Linux cgroups.

Blue and pink blades were colored using the lstopoStyle custom attribute:
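
One way to attach such colors is to add an lstopoStyle info attribute, that is an info element with name="lstopoStyle", to the relevant objects of an XML export before rendering it with lstopo. The Python sketch below does this with the standard library only; hwloc's own hwloc-annotate tool is likely the more robust choice. Assumptions to verify against the lstopo manual page: the Background=#rrggbb value syntax and placing the info element before child objects. The function name, the matching by type plus attributes such as os_index (Group objects may not have one), and the sample XML are all hypothetical.

```python
import xml.etree.ElementTree as ET


def add_lstopo_style(xml_text, obj_type, style, **match):
    """Return xml_text with an lstopoStyle info added to each matching <object>.

    An object matches when its "type" attribute equals obj_type and every
    keyword in `match` equals the corresponding XML attribute (as a string).
    """
    root = ET.fromstring(xml_text)
    # Collect targets first so the tree is not modified while iterating over it.
    targets = [
        obj for obj in root.iter("object")
        if obj.get("type") == obj_type
        and all(obj.get(key) == value for key, value in match.items())
    ]
    for obj in targets:
        # Assumption: lstopo reads the style string from this info element.
        info = ET.Element("info", {"name": "lstopoStyle", "value": style})
        # Assumption: place the info before any child objects.
        pos = next((i for i, child in enumerate(obj) if child.tag == "object"), len(obj))
        obj.insert(pos, info)
    return ET.tostring(root, encoding="unicode")


# Hypothetical, heavily trimmed hwloc-like XML export.
SAMPLE_XML = """<topology version="2.0">
  <object type="Machine" os_index="0">
    <object type="Package" os_index="0"/>
    <object type="Package" os_index="1">
      <object type="Core" os_index="0"/>
    </object>
  </object>
</topology>"""

if __name__ == "__main__":
    # Color the second package with a (hypothetical) blue background.
    print(add_lstopo_style(SAMPLE_XML, "Package", "Background=#3060c0", os_index="1"))
```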

16x Power7 (IBM Power 780 from 2011, with hwloc v1.11).

64 cores and 256 hardware threads total.

The buggy hardware Device Tree reports each octo-core package as 8 single-core packages:

Random and unusual platforms

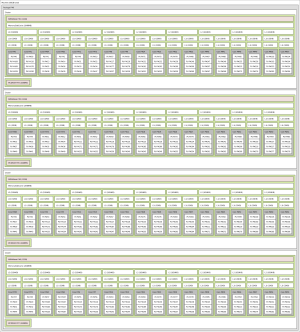

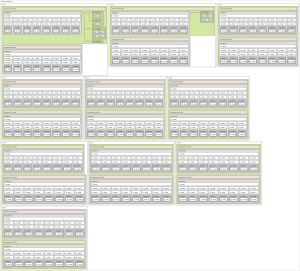

Apple Mac Mini with M1 hybrid processor (from 2022, with hwloc v2.9).

4 E-cores on top (energy efficient), 4 P-cores below (performance, with bigger caches).

The machine has 16GB of memory but most of it is given to the GPU (as shown in the OpenCL device):

Intel Core i7-1370P RaptorLake hybrid processor (from 2023, with hwloc v2.10).

6 P-cores on the left (performance, with bigger caches), 8 E-cores on the right (energy efficient):

Xeon Phi Knights Corner 7120 (from 2014, with hwloc v1.11):

PlayStation 3 (from 2008, with hwloc v1.11).

The old Linux kernel does not report cache information:

IBM/S390 (from 2010, with hwloc v1.11).

Packages are grouped within Books (the IBM mainframe name for its modular processor cards):

Miscellaneous

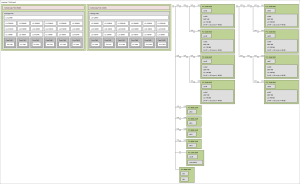

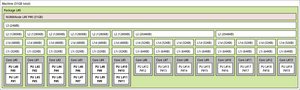

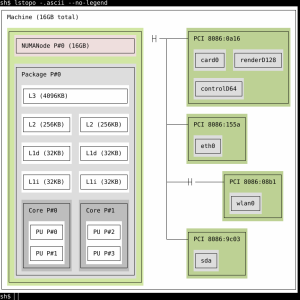

ASCII-art output, for when X11 is not available.

Generated by lstopo -.ascii in hwloc v1.11, or by lstopo -.txt in older releases:
